AI Server Prototype 1.1 (Voice Conversation Only)

This is the version of the AI server prototype without Discord. It adds a standby feature that kicks in when nobody talks to it.

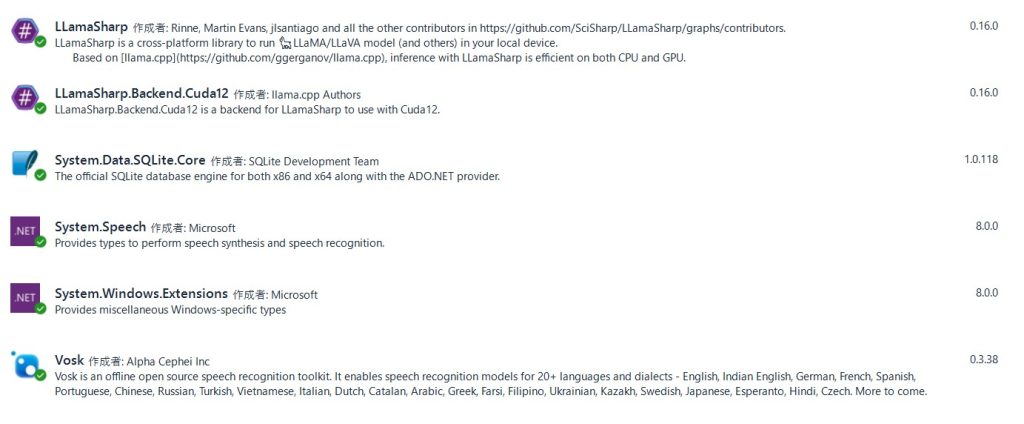

NuGet package information

Overview

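An AI program that only does voice conversation. If nobody speaks for 5 minutes, it stops answering. Calling it by one of the words set as the Awake keyword, such as "まい" or "まいちゃん", resumes the conversation.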
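The standby and wake-up behavior described above can be sketched in isolation as a small state holder. This is only an illustration: the `SilenceGate` class and its members are hypothetical names that do not appear in the program below, which uses a `System.Timers.Timer` and a shared flag instead. Time is passed in explicitly so the logic can be checked without waiting.

```csharp
using System;
using System.Linq;

// Usage: a 5-minute gate with the same wake words as the program below.
var gate = new SilenceGate(TimeSpan.FromMinutes(5), new[] { "まい", "まいちゃん", "まいさん" });
var t0 = new DateTime(2024, 1, 1, 12, 0, 0);

gate.Touch(t0);                                       // a conversation turn just ended
Console.WriteLine(gate.IsAsleep(t0.AddMinutes(4)));   // False
Console.WriteLine(gate.IsAsleep(t0.AddMinutes(6)));   // True
gate.TryWake("まいちゃん", t0.AddMinutes(7));         // a wake word resets the clock
Console.WriteLine(gate.IsAsleep(t0.AddMinutes(8)));   // False

// Hypothetical helper: remembers the time of the last activity instead of running a timer.
public sealed class SilenceGate
{
    private readonly TimeSpan _limit;
    private readonly string[] _wakeWords;
    private DateTime _lastActivity = DateTime.MinValue; // counts as asleep until the first Touch

    public SilenceGate(TimeSpan limit, string[] wakeWords)
    {
        _limit = limit;
        _wakeWords = wakeWords;
    }

    // Record activity (end of a conversation turn, or a wake word).
    public void Touch(DateTime now) => _lastActivity = now;

    // True once the silence limit has elapsed since the last activity.
    public bool IsAsleep(DateTime now) => now - _lastActivity >= _limit;

    // Wake up only when the heard text is exactly one of the wake words.
    public bool TryWake(string heard, DateTime now)
    {
        if (!_wakeWords.Contains(heard)) return false;
        Touch(now);
        return true;
    }
}
```

Tracking a timestamp rather than a timer keeps the sketch deterministic; a timer-based version behaves the same way from the user's point of view.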

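Configure the voice software near the top of the code. CeVIO AI and VOICEVOX are supported. For VOICEVOX, I added a command that launches it when it is not already running as a process, but writing code to find out where it is installed was too much hassle, so the path is hard-coded. Change it to match your environment. Also, my debug and production directory layouts differ, so the code leans heavily on environment variables; replace those with hard-coded paths for your setup.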
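One way to avoid editing the source for each machine is to read the VOICEVOX location from an environment variable and fall back to a fixed path. The sketch below is an assumption, not what the program does: `VOICEVOX_EXE` is a made-up variable name and `ResolveVoicevoxPath` is a hypothetical helper. The fallback is the path hard-coded in Program.cs.

```csharp
using System;
using System.IO;

// Resolve the VOICEVOX executable: environment variable first, then the fallback.
// "VOICEVOX_EXE" is a hypothetical variable name chosen for this sketch.
static string ResolveVoicevoxPath(string fallback)
{
    string? fromEnv = Environment.GetEnvironmentVariable("VOICEVOX_EXE");
    return string.IsNullOrWhiteSpace(fromEnv) ? fallback : fromEnv;
}

string path = ResolveVoicevoxPath(@"E:\VOICEVOX\VOICEVOX.exe");
Console.WriteLine(File.Exists(path)
    ? $"VOICEVOX found at {path}"
    : $"VOICEVOX not found at {path}; set VOICEVOX_EXE or edit the fallback.");
```

Checking `File.Exists` before calling `Process.Start` gives a readable message instead of an exception when the path is wrong.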

Program.cs: main program

using LLama.Common;

using LLama;

using LLama.Sampling;

using System.Media;

using System.Net.Http.Headers;

using System.Diagnostics;

using System.Speech.Recognition;

using System.Text.Json;

using Vosk;

using System.Threading;

namespace ChatProgram

{

public class Program

{

static async Task Main(string[] args)

{

// Call the async entry point from the console application and wait for it to finish

await MainAsync();

}

private const int intVoice = 1; // 0: none, 1: CeVIO AI, 2: VOICEVOX

// CeVIO AI parameters

private const string caCast = "双葉湊音";

// VOICEVOX parameters

private const int vxSpeaker = 8; // 春日部つむぎ (Kasukabe Tsumugi)

private const int vxPort = 50021;

// Awake settings

static string[] AwakeWord = new string[] { "まい", "まいちゃん", "まいさん" };

private const int SilenceDuration = 300000; // 5 minutes, in milliseconds

private static System.Timers.Timer? silenceTimer;

private static async Task MainAsync()

{

bool Op = true; // production flag (write the conversation to the chat DB)

bool msg = false; // print messages to the console

uint intContextSize = 4096;

// Location of the LLM model

string strModelPath = Environment.GetEnvironmentVariable("LLMPATH", System.EnvironmentVariableTarget.User) + @"dahara1\gemma-2-27b-it-gguf-japanese-imatrix\gemma-2-27b-it.f16.Q5_k_m.gguf";

// Vosk settings

Vosk.Vosk.SetLogLevel(-1); // turn log messages off

Model model = new Model(Environment.GetEnvironmentVariable("LLMPATH", System.EnvironmentVariableTarget.User)+@"vosk\vosk-model-ja-0.22");

try

{

string strChatlogPath = Environment.GetEnvironmentVariable("CHATDB", System.EnvironmentVariableTarget.User) + @"SkChatDB.db";

string strTable = "ch";

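// Summarize the chat log (awaited, since this method is already async)

await HistorySummary.Run(strChatlogPath, intContextSize / 2, strTable);

// Chat log store

ChatHistoryDB chtDB;

// Wait flag: true while the AI is answering or the program is on standby, so new input is ignored

bool Wt = false;

// Timer and event handler definition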

silenceTimer = new System.Timers.Timer(SilenceDuration);

silenceTimer.Elapsed += (sender, e) =>

{

Wt = true;

if (msg) Console.WriteLine("休止しました。");

silenceTimer.Stop();

};

Console.ForegroundColor = ConsoleColor.Blue;

// Load the LLM model and set its parameters

var modPara = new ModelParams(strModelPath)

{

ContextSize = intContextSize,

Seed = 1337,

GpuLayerCount = 24

};

LLamaWeights llmWeit = LLamaWeights.LoadFromFile(modPara);

LLamaContext llmContx = llmWeit.CreateContext(modPara);

InteractiveExecutor itrEx = new(llmContx);

// Load the chat log

ChatHistory chtHis = new ChatHistory();

chtDB = new ChatHistoryDB(strChatlogPath, chtHis, strTable);

ChatSession chtSess = new(itrEx, chtHis);

var varHidewd = new LLamaTransforms.KeywordTextOutputStreamTransform(["User: ", "Assistant: "]);

chtSess.WithOutputTransform(varHidewd);

InferenceParams infPara = new()

{

SamplingPipeline = new DefaultSamplingPipeline()

{

Temperature = 0.9f,

//RepeatPenalty = 1.0f,

},

AntiPrompts = ["User:"],

//AntiPrompts = ["User:", "<|eot_id|>"], // for Llama 3

MaxTokens = 256,

};

// SpeechRecognition setup (recognizer2 listens only for the awake words)

SpeechRecognitionEngine recognizer2 = new SpeechRecognitionEngine(new System.Globalization.CultureInfo("ja-JP"));

Choices awake = new Choices(AwakeWord);

GrammarBuilder gb = new GrammarBuilder(awake);

recognizer2.LoadGrammar(new Grammar(gb));

recognizer2.SpeechRecognized += (sender, e) =>

{

if (e.Result.Confidence > 0.9)

{

if (msg) Console.WriteLine($"{e.Result.Text}と呼ばれました!");

Wt = false;

silenceTimer.Stop();

silenceTimer.Start();

}

};

SpeechRecognitionEngine recognizer = new SpeechRecognitionEngine(new System.Globalization.CultureInfo("ja-JP"));

recognizer.LoadGrammar(new DictationGrammar());

// ▼▼▼ Start of the SpeechRecognized event handler ▼▼▼

recognizer.SpeechRecognized += async (sender, e) =>

{

if (!Wt)

{

Wt = true;

// Write the recognized audio to a MemoryStream as WAV

MemoryStream st = new MemoryStream();

e.Result.Audio.WriteToWaveStream(st);

st.Position = 0;

// Feed the audio to Vosk in chunks through a byte buffer

VoskRecognizer rec = new VoskRecognizer(model, 16000.0f);

rec.SetMaxAlternatives(0);

byte[] buffer = new byte[4096];

int bytesRead;

while ((bytesRead = st.Read(buffer, 0, buffer.Length)) > 0)

rec.AcceptWaveform(buffer, bytesRead);

string strUserInput = "";

// Extract the text from the JSON-formatted result

string jsontext = rec.FinalResult();

var jsondoc = JsonDocument.Parse(jsontext);

if (jsondoc.RootElement.TryGetProperty("text", out var element))

{

strUserInput = element.GetString() ?? "";

}

strUserInput = strUserInput.Replace(" ", "");

Console.ForegroundColor = ConsoleColor.White;

if (msg) Console.WriteLine($"User: {strUserInput}");

ChatHistory.Message msgText = new(AuthorRole.User, "User: "+ strUserInput);

if (Op) chtDB.WriteHistory(AuthorRole.User, "User: "+ strUserInput);

// Generate and display the reply

Console.ForegroundColor = ConsoleColor.Yellow;

string strMsg = "";

await foreach (string strText in chtSess.ChatAsync(msgText, infPara))

{

strMsg += strText;

}

// Strip "User:" and "Assistant:" before sending the text to the voice software

string strSndmsg = strMsg.Replace("User:", "").Replace("Assistant:", "").Replace("assistant:", "").Trim();

if (msg) Console.WriteLine("Assistant: " + strSndmsg);

if (Op) chtDB.WriteHistory(AuthorRole.Assistant, strMsg);

// Select the voice software

if (intVoice==1) CevioAI(strSndmsg);

if (intVoice==2)

{

// Launch VOICEVOX if it is not already running

if (Process.GetProcessesByName("VOICEVOX").Length == 0)

{

var vx = new ProcessStartInfo();

vx.FileName = @"E:\VOICEVOX\VOICEVOX.exe";

vx.UseShellExecute = true;

Process.Start(vx);

// Give VOICEVOX time to start without blocking the thread

await Task.Delay(2000);

}

await Voicevox(strSndmsg);

}

// Dispose the MemoryStream

st.Close();

st.Dispose();

Wt = false;

silenceTimer.Stop();

silenceTimer.Start();

}

};

// ▲▲▲ End of the SpeechRecognized event handler ▲▲▲

// Configure input to the speech recognizer.

recognizer2.SetInputToDefaultAudioDevice();

// Start asynchronous, continuous speech recognition.

recognizer2.RecognizeAsync(RecognizeMode.Multiple);

// Configure input to the speech recognizer.

recognizer.SetInputToDefaultAudioDevice();

// Start asynchronous, continuous speech recognition.

recognizer.RecognizeAsync(RecognizeMode.Multiple);

// Timer Start

silenceTimer.Start();

Console.WriteLine("★★ マイクに向かって話してください ★★");

// Keep the console window open.

while (true)

{

Console.ReadLine();

}

}

catch (Exception ex)

{

Console.WriteLine(ex.ToString());

}

}

private static void CevioAI(string strMsg)

{

try

{

dynamic service = Activator.CreateInstance(Type.GetTypeFromProgID("CeVIO.Talk.RemoteService2.ServiceControl2V40"));

service.StartHost(false);

dynamic talker = Activator.CreateInstance(Type.GetTypeFromProgID("CeVIO.Talk.RemoteService2.Talker2V40"));

talker.Cast = caCast;

dynamic result = talker.Speak(strMsg);

result.Wait();

// Forgetting to release these causes a memory leak

System.Runtime.InteropServices.Marshal.ReleaseComObject(talker);

System.Runtime.InteropServices.Marshal.ReleaseComObject(service);

}

catch (Exception ex)

{

Console.WriteLine(ex.ToString());

}

}

private static async Task Voicevox(string strMsg)

{

MemoryStream? ms;

try

{

using (var httpClient = new HttpClient())

{

string strQuery;

// Create the audio query (the text is percent-encoded so characters such as & or ? cannot break the URL)

using (var varRequest = new HttpRequestMessage(new HttpMethod("POST"), $"http://127.0.0.1:{vxPort}/audio_query?text={Uri.EscapeDataString(strMsg)}&speaker={vxSpeaker}&speedScale=1.5&prePhonemeLength=0&postPhonemeLength=0&intonationScale=1.16&enable_interrogative_upspeak=true"))

{

varRequest.Headers.TryAddWithoutValidation("accept", "application/json");

varRequest.Content = new StringContent("");

varRequest.Content.Headers.ContentType = MediaTypeHeaderValue.Parse("application/x-www-form-urlencoded");

var response = await httpClient.SendAsync(varRequest);

strQuery = await response.Content.ReadAsStringAsync();

}

// Synthesize speech from the audio query

using (var request = new HttpRequestMessage(new HttpMethod("POST"), $"http://127.0.0.1:{vxPort}/synthesis?speaker={vxSpeaker}&enable_interrogative_upspeak=true&speedScale=1.5&prePhonemeLength=0&postPhonemeLength=0&intonationScale=1.16"))

{

request.Headers.TryAddWithoutValidation("accept", "audio/wav");

request.Content = new StringContent(strQuery);

request.Content.Headers.ContentType = MediaTypeHeaderValue.Parse("application/json");

var response = await httpClient.SendAsync(request);

// Keep the audio in memory

using (ms = new MemoryStream())

{

using (var httpStream = await response.Content.ReadAsStreamAsync())

{

httpStream.CopyTo(ms);

ms.Flush();

}

}

}

}

ms = new MemoryStream(ms.ToArray());

// Load the audio

var player = new SoundPlayer(ms);

// Play it

player.PlaySync();

}

catch (Exception ex)

{

Console.WriteLine(ex.ToString());

}

}

}

}
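Text sent to VOICEVOX's `audio_query` endpoint travels in the query string, so characters such as `&`, `?`, or spaces in a reply need percent-encoding. Here is a minimal sketch of building that URL with `Uri.EscapeDataString`. The helper name `BuildAudioQueryUrl` is made up for this example, and only the `text` and `speaker` parameters are included.

```csharp
using System;

// Hypothetical helper: builds the audio_query URL with the text percent-encoded.
static string BuildAudioQueryUrl(int port, int speaker, string text)
{
    // EscapeDataString percent-encodes '&', '?', '#', spaces, and non-ASCII characters.
    string encoded = Uri.EscapeDataString(text);
    return $"http://127.0.0.1:{port}/audio_query?text={encoded}&speaker={speaker}";
}

Console.WriteLine(BuildAudioQueryUrl(50021, 8, "a&b c?"));
// prints: http://127.0.0.1:50021/audio_query?text=a%26b%20c%3F&speaker=8
```

Without the encoding, an `&` inside the reply would end the `text` parameter early and the rest of the sentence would be lost.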

ChatHistoryDB.cs: manages the chat history in SQLite

using LLama.Common;

using System.Data.SQLite;

namespace ChatProgram

{

class ChatHistoryDB

{

ChatHistory? chtHis;

string strDbpath;

Dictionary<string, AuthorRole>? Roles = new Dictionary<string, AuthorRole> { { "System", AuthorRole.System }, { "User", AuthorRole.User }, { "Assistant", AuthorRole.Assistant } };

string strTable;

public ChatHistoryDB(string strDbpath, ChatHistory chtHis, string strTable)

{

this.chtHis= chtHis;

this.strDbpath= strDbpath;

this.strTable= strTable;

try

{

var conSb = new SQLiteConnectionStringBuilder { DataSource = strDbpath };

var con = new SQLiteConnection(conSb.ToString());

con.Open();

using (var cmd = new SQLiteCommand(con))

{

cmd.CommandText = $"CREATE TABLE IF NOT EXISTS {strTable}(" +

"\"sq\" INTEGER," +

"\"dt\" TEXT NOT NULL," +

"\"id\" TEXT NOT NULL," +

"\"msg\" TEXT," +

"\"flg\" INTEGER DEFAULT 0, PRIMARY KEY(\"sq\"))";

cmd.ExecuteNonQuery();

cmd.CommandText = $"select count(*) from {strTable}";

using (var reader = cmd.ExecuteReader())

{

// If the table has no rows yet, set up the system rows

long reccount = 0;

if (reader.Read()) reccount = (long)reader[0];

reader.Close();

if (reccount<1)

{

// Add the row that sets up the Assistant's personality

cmd.CommandText = $"insert into {strTable}(dt,id,msg) values(datetime('now', 'localtime'),'System','あなたは優秀なアシスタントです。')";

cmd.ExecuteNonQuery();

// Add the summary row

cmd.CommandText = $"insert into {strTable}(dt,id,msg) values(datetime('now', 'localtime'),'System','')";

cmd.ExecuteNonQuery();

}

}

cmd.CommandText = $"select * from {strTable} where flg=0 order by sq";

using (var reader = cmd.ExecuteReader())

{

while (reader.Read())

{

if (chtHis is null) chtHis=new ChatHistory();

chtHis.AddMessage(Roles[(string)reader["id"]], (string)reader["msg"]);

}

}

}

con.Close();

}

catch (Exception ex)

{

Console.WriteLine(ex.Message);

}

}

public interface IDisposable

{

void Dispose();

}

public void WriteHistory(AuthorRole aurID, string strMsg, bool booHis = false)

{

try

{

var conSb = new SQLiteConnectionStringBuilder { DataSource = strDbpath };

var con = new SQLiteConnection(conSb.ToString());

con.Open();

using (var cmd = new SQLiteCommand(con))

{

if (booHis)

{

if (chtHis is null) chtHis=new ChatHistory();

chtHis.AddMessage(aurID, strMsg);

}

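cmd.CommandText = $"insert into {strTable}(dt,id,msg) values(datetime('now', 'localtime'),@id,@msg)";

// Bind the values as parameters so quotes in the message cannot break the SQL

cmd.Parameters.AddWithValue("@id", Roles.FirstOrDefault(v => v.Value.Equals(aurID)).Key);

cmd.Parameters.AddWithValue("@msg", strMsg);

cmd.ExecuteNonQuery();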

}

con.Close();

}

catch (Exception ex)

{

Console.WriteLine(ex.Message);

}

}

}

}
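Chat messages can contain single quotes, which matters whenever text is placed into an SQL statement. The sketch below round-trips such a message through an in-memory SQLite table using bound parameters. It assumes the same System.Data.SQLite package used in this article. `RoundTrip` is a hypothetical helper, and the table only imitates the layout created by `ChatHistoryDB`.

```csharp
using System;
using System.Data.SQLite; // the same NuGet package the code in this article uses

// Hypothetical helper: stores one message in an in-memory table and reads it back.
static string RoundTrip(string message)
{
    using var con = new SQLiteConnection("Data Source=:memory:");
    con.Open();
    using var cmd = new SQLiteCommand(con);

    cmd.CommandText = "CREATE TABLE ch(sq INTEGER PRIMARY KEY, dt TEXT NOT NULL, id TEXT NOT NULL, msg TEXT, flg INTEGER DEFAULT 0)";
    cmd.ExecuteNonQuery();

    // The values are bound, not concatenated, so quotes stay part of the data.
    cmd.CommandText = "INSERT INTO ch(dt,id,msg) VALUES(datetime('now','localtime'),@id,@msg)";
    cmd.Parameters.AddWithValue("@id", "User");
    cmd.Parameters.AddWithValue("@msg", message);
    cmd.ExecuteNonQuery();

    cmd.Parameters.Clear();
    cmd.CommandText = "SELECT msg FROM ch WHERE sq=1";
    return (string)cmd.ExecuteScalar();
}

Console.WriteLine(RoundTrip("User: it's a 'quoted' message"));
```

The table name still has to be interpolated into the statement, because SQLite parameters can stand in for values only, not identifiers.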

HistorySummary.cs: summarizes and compresses conversations that have grown long

I use "DataPilot-ArrowPro-7B-RobinHood" for summarization because it does the job well.

using LLama.Common;

using LLama;

using System.Data.SQLite;

namespace ChatProgram

{

internal class HistorySummary

{

public static async Task Run(string strChatlogPath, uint HistoryMax, string strTable)

{

Console.ForegroundColor = ConsoleColor.Green;

Console.WriteLine("**Start History Summary**");

try

{

// Location of the LLM model

string strModelPath = Environment.GetEnvironmentVariable("LLMPATH", System.EnvironmentVariableTarget.User) + @"mmnga\DataPilot-ArrowPro-7B-RobinHood-gguf\DataPilot-ArrowPro-7B-RobinHood-Q8_0.gguf";

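// Read rows from the chat DB

string strChtHis = "";

uint i = 0;

uint intCtxtSize = 4096; // number of characters that can be processed at once

long svSq = 0; // sq where processing started

long svSqMax = 0; // sq where processing ended

string strSav = ""; // summary before this run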

var conSb = new SQLiteConnectionStringBuilder { DataSource = strChatlogPath };

var con = new SQLiteConnection(conSb.ToString());

con.Open();

using (var cmd = new SQLiteCommand(con))

{

// Count the characters in the active rows

cmd.CommandText = $"select sum(length(msg)) from {strTable} where flg=0";

using (var reader = cmd.ExecuteReader())

{

reader.Read();

//Console.WriteLine($"文字数:{reader[0]}");

// Skip if the history is below the maximum size, or if the excess over the maximum is less than one processable chunk

if ((long)reader[0] < (long)HistoryMax || (long)reader[0] - (long)HistoryMax < (long)intCtxtSize)

{

con.Close();

Console.ForegroundColor = ConsoleColor.Green;

Console.WriteLine("**E.N.D History Summary**");

return;

}

}

// Read the active rows and build the text to summarize

cmd.CommandText = $"select * from {strTable} where flg=0 order by sq";

string strCht = "";

using (var reader = cmd.ExecuteReader())

{

while (reader.Read())

{

if ((string)reader["id"] != "System")

{

strCht = (string)reader["id"] + ": " + ((string)reader["msg"]).Replace("User:", "").Replace("Assistant:", "").Replace("assistant:", "").Trim() + "\n";

i += (uint)strCht.Length;

if (i > intCtxtSize) { break; }

strChtHis += strCht;

}

// Save the existing summary from the system row

if ((string)reader["id"] == "System" && (long)reader["sq"] == 2) strSav = (string)reader["msg"];

// Record the first non-system row where processing started

if ((string)reader["id"] != "System" && svSq == 0)

{

svSq = (long)reader["sq"];

}

svSqMax = (long)reader["sq"];

}

}

}

// LLM processing

Console.ForegroundColor = ConsoleColor.Blue;

// Load the LLM model and set its parameters

ModelParams modPara = new(strModelPath)

{

ContextSize = intCtxtSize,

GpuLayerCount = 24

};

ChatHistory chtHis = new ChatHistory();

chtHis.AddMessage(AuthorRole.System, "#命令書\n" +

"・あなたは優秀な編集者です。\n" +

"・ユーザーとアシスタントの会話を要約してください。\n" +

"#条件\n" +

"・重要なキーワードを取りこぼさない。\n" +

"#出力形式\n" +

"・例)要約: ふたりは親密な会話しました。");

using LLamaWeights llmWeit = LLamaWeights.LoadFromFile(modPara);

using LLamaContext llmContx = llmWeit.CreateContext(modPara);

InteractiveExecutor itrEx = new(llmContx);

ChatSession chtSess = new(itrEx, chtHis);

var varHidewd = new LLamaTransforms.KeywordTextOutputStreamTransform(["User:", "Assistant:"]);

chtSess.WithOutputTransform(varHidewd);

InferenceParams infPara = new()

{

Temperature = 0f,

AntiPrompts = new List<string> { "User:" }

};

// User's turn

Console.ForegroundColor = ConsoleColor.White;

string strInput = strChtHis;

ChatHistory.Message msg = new(AuthorRole.User, strInput);

// AI's turn

Console.ForegroundColor = ConsoleColor.Magenta;

string strMsg = "";

await foreach (string strAns in chtSess.ChatAsync(msg, infPara))

{

Console.Write(strAns);

strMsg += strAns;

}

using (var cmd = new SQLiteCommand(con))

{

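// Update the sq=2 row with the summary (strip the "要約:" label, line breaks, and surrounding whitespace)

string strSql = $"update {strTable} set msg=@msg where sq=2 and id='System'";

//Console.Write (strSql+"\n");

cmd.CommandText = strSql;

// Bind the summary as a parameter so quotes in the generated text cannot break the SQL

cmd.Parameters.AddWithValue("@msg", strSav + "\n" + strMsg.Replace("要約:", "").Replace("\r", "").Replace("\n", "").Trim());

cmd.ExecuteNonQuery();

cmd.Parameters.Clear();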

// Logically delete the rows that were processed

strSql = $"update {strTable} set flg=1 where (sq between {svSq} and {svSqMax}) and id<>'System' and flg=0";

//Console.Write(strSql+"\n");

cmd.CommandText = strSql;

cmd.ExecuteNonQuery();

}

// Close the DB

con.Close();

}

catch (Exception ex)

{

Console.WriteLine(ex.Message);

}

Console.ForegroundColor = ConsoleColor.Green;

Console.WriteLine("**E.N.D History Summary**");

}

}

}
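The guard at the top of `HistorySummary.Run` decides whether a summarization pass happens at all. Written as a standalone predicate, it looks like the sketch below. `ShouldSummarize` is a hypothetical name, and the sample numbers assume the values used in this article: `HistoryMax` is half of the 4096 context size and the chunk size is 4096 characters.

```csharp
using System;

// Mirrors the guard in HistorySummary.Run: summarize only when the active
// history exceeds historyMax by at least one full chunk.
static bool ShouldSummarize(long totalChars, long historyMax, long chunkSize)
    => totalChars >= historyMax && totalChars - historyMax >= chunkSize;

Console.WriteLine(ShouldSummarize(5000, 2048, 4096)); // False
Console.WriteLine(ShouldSummarize(6144, 2048, 4096)); // True
```

With these settings a pass only runs once the active rows total at least 6144 characters, so short sessions start up without loading the summarization model.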

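If you keep it running all the time, restarting it from a scheduler might be a good idea, since the summarization runs at startup.

Example PowerShell commands for reference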

AI_Server01.ps1

Stop-Process -Name "process name of the app"

Start-Sleep -Seconds 3

Start-Process -FilePath "app path + EXE name"