Talking to an LLM by voice with Vosk

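In my previous article I found that Vosk is fast enough to be usable for conversation, so this time I wired in an LLM and tried holding a spoken conversation with it.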

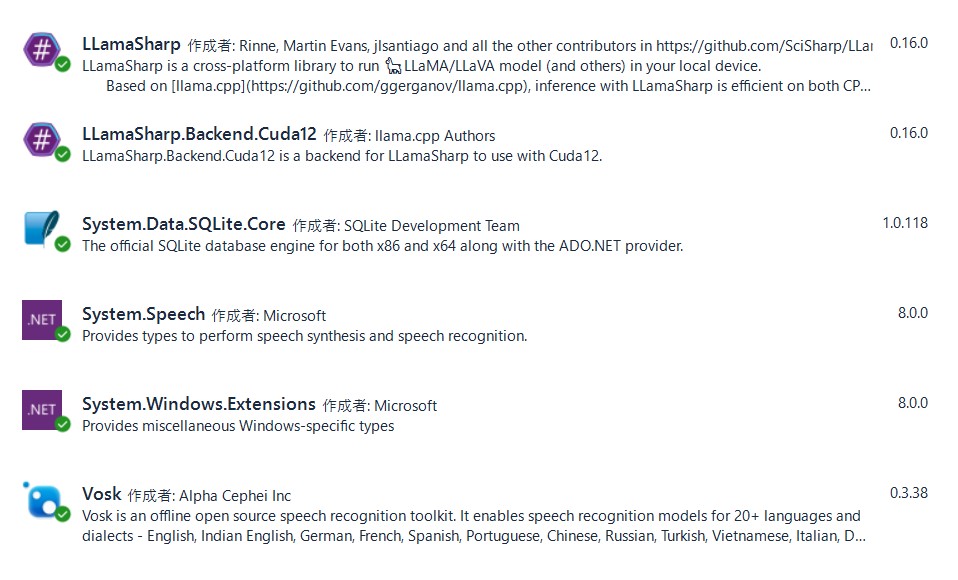

NuGet packages

System.Windows.Extensions is included to play the VOICEVOX audio (it provides `System.Media.SoundPlayer`, which the VOICEPEAK path also uses).

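Overview

The SpeechRecognized event of System.Speech detects each utterance. Its captured audio (not System.Speech's own transcript) is transcribed with Vosk, the text is passed to the LLM, and the answer is played back with VOICEVOX, CeVIO AI, or VOICEPEAK.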

For the LLM I use gemma-2-27b-it, a personal favorite, in place of Vecteus2, which is fast to respond and good at conversation.
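The flow above boils down to one "turn" per recognized utterance. The sketch below is a minimal illustration assuming C# 9+ top-level statements; the three delegates are hypothetical stand-ins for Vosk, LLamaSharp and the TTS engines, not their real APIs. Only the JSON handling mirrors what the program does with Vosk's `FinalResult()`.

```csharp
using System;
using System.Text.Json;
using System.Threading.Tasks;

// Vosk's FinalResult() returns JSON such as {"text" : "..."}. The Japanese model
// separates words with spaces, so they are removed before the text goes to the LLM.
static string ExtractVoskText(string json)
{
    using JsonDocument doc = JsonDocument.Parse(json);
    return doc.RootElement.TryGetProperty("text", out JsonElement textElement)
        ? (textElement.GetString() ?? "").Replace(" ", "")
        : "";
}

// One turn: recognized audio -> text -> LLM reply -> speech.
// The delegates are hypothetical placeholders for the real components.
static async Task<string> RunTurnAsync(
    byte[] audio,
    Func<byte[], string> transcribeToJson,   // e.g. a wrapper around VoskRecognizer (assumed)
    Func<string, Task<string>> askLlm,       // e.g. a wrapper around ChatSession.ChatAsync (assumed)
    Func<string, Task> speak)                // e.g. VOICEVOX / CeVIO AI / VOICEPEAK (assumed)
{
    string userText = ExtractVoskText(transcribeToJson(audio));
    if (userText.Length == 0) return "";     // nothing recognized: skip the LLM call
    string reply = await askLlm(userText);
    await speak(reply);
    return reply;
}

// Usage with fake components, so the flow runs without a microphone or a model.
string spoken = "";
string result = await RunTurnAsync(
    Array.Empty<byte>(),
    _ => "{\"text\" : \"こんにちは 元気\"}",
    q => Task.FromResult($"echo:{q}"),
    s => { spoken = s; return Task.CompletedTask; });
Console.WriteLine(result);
```

Passing the components in as delegates keeps the turn logic testable on its own; in the real program they are inlined in the event handler.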

Program.cs (main)

Updated 2024/9/22: when a device with a combined microphone and speaker is used, the AI could hear its own voice while speaking. This is now avoided.
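The fix relies on a flag (`Wt` in the code) that drops anything recognized while the AI is thinking or speaking. As a hedged sketch, assuming C# 9+ top-level statements and using hypothetical names (`TryBeginTurn`, `EndTurn`, `OnSpeechRecognizedAsync`), the same idea can be made atomic with `Interlocked.CompareExchange` and released in `finally`, so a failed LLM or TTS call cannot leave the program deaf.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

int busy = 0; // 0 = listening, 1 = AI's turn (thinking or speaking)

// Atomically flips 0 -> 1; returns false if a turn is already in progress.
bool TryBeginTurn() => Interlocked.CompareExchange(ref busy, 1, 0) == 0;
void EndTurn() => Volatile.Write(ref busy, 0);

// Handler pattern: utterances that arrive during the AI's turn
// (e.g. its own voice picked up by a combined mic/speaker device) are dropped.
async Task<bool> OnSpeechRecognizedAsync(Func<Task> handleTurn)
{
    if (!TryBeginTurn()) return false;   // ignored: it is the AI's turn
    try
    {
        await handleTurn();
        return true;
    }
    finally
    {
        EndTurn();                        // released even if the LLM/TTS call throws
    }
}

// Usage: a second utterance arriving mid-turn is ignored.
var release = new TaskCompletionSource();
Task<bool> first = OnSpeechRecognizedAsync(() => release.Task);
bool second = await OnSpeechRecognizedAsync(() => Task.CompletedTask);
release.SetResult();
bool firstHandled = await first;
bool third = await OnSpeechRecognizedAsync(() => Task.CompletedTask);
Console.WriteLine($"{firstHandled} {second} {third}"); // True False True
```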

```csharp
using LLama.Common;
using LLama;
using System.Media;
using System.Net.Http.Headers;
using System.Diagnostics;
using System.Speech.Recognition;
using System.Text.Json;
using Vosk;
using LLama.Sampling;

namespace ChatProgram
{
    public class Program
    {
        // Async entry point: the console app awaits MainAsync until it finishes
        static async Task Main(string[] args)
        {
            await MainAsync();
        }

        public static async Task MainAsync()
        {
            bool Op = true; // production flag: write the conversation to the chat log DB

            // Location of the LLM model
            string strModelPath = Environment.GetEnvironmentVariable("LLMPATH", EnvironmentVariableTarget.User) + @"dahara1\gemma-2-27b-it-gguf-japanese-imatrix\gemma-2-27b-it.f16.Q4_k_m.gguf";

            // Vosk settings
            Vosk.Vosk.SetLogLevel(-1); // turn Vosk log messages off
            Model model = new Model(Environment.GetEnvironmentVariable("LLMPATH", EnvironmentVariableTarget.User) + @"vosk\vosk-model-ja-0.22");

            try
            {
                // Chat log system
                string strChatlogPath = Environment.GetEnvironmentVariable("CHATDB", EnvironmentVariableTarget.User) + @"SkChatDB.db";
                string strTable = "ch";

                // Wait flag for the AI's turn: while it is true, recognized input is discarded
                bool Wt = false;

                Console.ForegroundColor = ConsoleColor.Blue;

                // Load the LLM model and set its parameters
                var modPara = new ModelParams(strModelPath)
                {
                    ContextSize = 8192,
                    Seed = 1337,
                    GpuLayerCount = 24
                };
                LLamaWeights llmWeit = LLamaWeights.LoadFromFile(modPara);
                LLamaContext llmContx = llmWeit.CreateContext(modPara);
                InteractiveExecutor itrEx = new(llmContx);

                // Load the chat log into the chat history
                ChatHistory chtHis = new ChatHistory();
                ChatHistoryDB chtDB = new ChatHistoryDB(strChatlogPath, chtHis, strTable);

                ChatSession chtSess = new(itrEx, chtHis);
                var varHidewd = new LLamaTransforms.KeywordTextOutputStreamTransform(["User: ", "Assistant: "]);
                chtSess.WithOutputTransform(varHidewd);

                InferenceParams infPara = new()
                {
                    SamplingPipeline = new DefaultSamplingPipeline()
                    {
                        Temperature = 0.9f,
                        //RepeatPenalty = 1.0f,
                    },
                    AntiPrompts = ["User:"],
                    //AntiPrompts = ["User:", "<|eot_id|>"], // for Llama 3
                    MaxTokens = 256,
                };

                // SpeechRecognition settings
                using (SpeechRecognitionEngine recognizer =
                    new SpeechRecognitionEngine(new System.Globalization.CultureInfo("ja-JP")))
                {
                    recognizer.LoadGrammar(new DictationGrammar());

                    // ▼▼▼ SpeechRecognized event definition: start ▼▼▼
                    recognizer.SpeechRecognized += async (sender, e) =>
                    {
                        // Ignore anything recognized during the AI's turn
                        // (e.g. the AI's own voice on a combined mic/speaker device)
                        if (Wt) return;
                        Wt = true;
                        try
                        {
                            // Write the recognized wave into a MemoryStream
                            using MemoryStream st = new MemoryStream();
                            e.Result.Audio.WriteToWaveStream(st);
                            st.Position = 0;

                            // Feed the audio to Vosk in 4 KB chunks, at the captured sample rate
                            using VoskRecognizer rec = new VoskRecognizer(model, e.Result.Audio.Format.SamplesPerSecond);
                            rec.SetMaxAlternatives(0);
                            byte[] buffer = new byte[4096];
                            int bytesRead;
                            while ((bytesRead = st.Read(buffer, 0, buffer.Length)) > 0)
                                rec.AcceptWaveform(buffer, bytesRead);

                            // Extract the text from the JSON result
                            string strUserInput = "";
                            using (JsonDocument jsondoc = JsonDocument.Parse(rec.FinalResult()))
                            {
                                if (jsondoc.RootElement.TryGetProperty("text", out var element))
                                    strUserInput = element.GetString() ?? "";
                            }
                            // The Japanese model separates words with spaces
                            strUserInput = strUserInput.Replace(" ", "");
                            if (strUserInput.Length == 0) return; // nothing recognized

                            Console.ForegroundColor = ConsoleColor.White;
                            Console.WriteLine($"User: {strUserInput}");
                            ChatHistory.Message msgText = new(AuthorRole.User, "User: " + strUserInput);
                            if (Op) chtDB.WriteHistory(AuthorRole.User, "User: " + strUserInput);

                            // Collect and display the answer
                            Console.ForegroundColor = ConsoleColor.Yellow;
                            string strMsg = "";
                            await foreach (string strText in chtSess.ChatAsync(msgText, infPara))
                            {
                                strMsg += strText;
                            }

                            // Strip "User:" and "Assistant:" before speaking
                            string strSndmsg = strMsg.Replace("User:", "").Replace("Assistant:", "").Replace("assistant:", "").Trim();
                            Console.WriteLine(strSndmsg);
                            if (Op) chtDB.WriteHistory(AuthorRole.Assistant, strMsg);

                            // To use VOICEVOX, uncomment the next line and comment out CevioAI
                            //await Voicevox(strSndmsg);
                            CevioAI(strSndmsg);
                            //VoicePeak(strSndmsg);
                        }
                        catch (Exception ex)
                        {
                            // An exception escaping an async void handler would crash the process
                            Console.WriteLine(ex.ToString());
                        }
                        finally
                        {
                            Wt = false; // back to listening, even if something failed
                        }
                    };
                    // ▲▲▲ SpeechRecognized event definition: end ▲▲▲

                    // Configure input to the speech recognizer.
                    recognizer.SetInputToDefaultAudioDevice();

                    // Start asynchronous, continuous speech recognition.
                    recognizer.RecognizeAsync(RecognizeMode.Multiple);

                    Console.WriteLine("★★ マイクに向かって話してください ★★"); // "Please speak into the microphone"

                    // Keep the console window open.
                    while (true)
                    {
                        Console.ReadLine();
                    }
                }
            }
            catch (Exception ex)
            {
                Console.WriteLine(ex.ToString());
            }
        }

        public static void CevioAI(string strMsg)
        {
            try
            {
                dynamic service = Activator.CreateInstance(Type.GetTypeFromProgID("CeVIO.Talk.RemoteService2.ServiceControl2V40"));
                service.StartHost(false);
                dynamic talker = Activator.CreateInstance(Type.GetTypeFromProgID("CeVIO.Talk.RemoteService2.Talker2V40"));
                talker.Cast = "双葉湊音";
                dynamic result = talker.Speak(strMsg);
                result.Wait();

                // Forgetting to release the COM objects leaks memory
                System.Runtime.InteropServices.Marshal.ReleaseComObject(talker);
                System.Runtime.InteropServices.Marshal.ReleaseComObject(service);
            }
            catch (Exception ex)
            {
                Console.WriteLine(ex.ToString());
            }
        }

        public static void VoicePeak(string strMsg)
        {
            string wavFileName = Environment.GetEnvironmentVariable("TESTDATA", EnvironmentVariableTarget.User) + @"voicepeak.wav";
            try
            {
                var processSI = new ProcessStartInfo
                {
                    FileName = @"E:\Program Files\VOICEPEAK\voicepeak.exe",
                    // ArgumentList quotes each argument, so quotes in the reply cannot break the command line
                    ArgumentList = { "-s", strMsg, "-o", wavFileName },
                    UseShellExecute = false,
                    RedirectStandardOutput = true,
                    CreateNoWindow = true
                };
                using (var process = Process.Start(processSI))
                {
                    // Drain the redirected output so the child process cannot block on a full pipe
                    process?.StandardOutput.ReadToEnd();
                    process?.WaitForExit();
                }

                // Play the generated wav
                using var player = new SoundPlayer(wavFileName);
                player.PlaySync();
            }
            catch (Exception ex)
            {
                Console.WriteLine(ex.ToString());
            }
        }

        public static async Task Voicevox(string strMsg)
        {
            try
            {
                using var httpClient = new HttpClient();
                int intSpeaker = 8; // 春日部つむぎ (Kasukabe Tsumugi)
                string strText = Uri.EscapeDataString(strMsg); // URL-encode the reply text

                // Generate the audio query.
                // Note: speedScale and similar settings are probably fields of the returned
                // AudioQuery JSON rather than query parameters; check the engine's /docs.
                string strQuery;
                using (var varRequest = new HttpRequestMessage(HttpMethod.Post, $"http://localhost:50021/audio_query?text={strText}&speaker={intSpeaker}&speedScale=1.1&prePhonemeLength=0&postPhonemeLength=0&intonationScale=1.16&enable_interrogative_upspeak=true"))
                {
                    varRequest.Headers.TryAddWithoutValidation("accept", "application/json");
                    varRequest.Content = new StringContent("");
                    varRequest.Content.Headers.ContentType = MediaTypeHeaderValue.Parse("application/x-www-form-urlencoded");
                    var response = await httpClient.SendAsync(varRequest);
                    response.EnsureSuccessStatusCode();
                    strQuery = await response.Content.ReadAsStringAsync();
                }

                // Synthesize speech from the audio query
                byte[] wav;
                using (var request = new HttpRequestMessage(HttpMethod.Post, $"http://localhost:50021/synthesis?speaker={intSpeaker}&enable_interrogative_upspeak=true&speedScale=1.1&prePhonemeLength=0&postPhonemeLength=0&intonationScale=1.16"))
                {
                    request.Headers.TryAddWithoutValidation("accept", "audio/wav");
                    request.Content = new StringContent(strQuery);
                    request.Content.Headers.ContentType = MediaTypeHeaderValue.Parse("application/json");
                    var response = await httpClient.SendAsync(request);
                    response.EnsureSuccessStatusCode();
                    wav = await response.Content.ReadAsByteArrayAsync();
                }

                // Load the wav from memory and play it
                using var ms = new MemoryStream(wav);
                using var player = new SoundPlayer(ms);
                player.PlaySync();
            }
            catch (Exception ex)
            {
                Console.WriteLine(ex.ToString());
            }
        }
    }
}
```
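On the VOICEVOX side, my understanding (an assumption; check `http://localhost:50021/docs` for your engine version) is that `/audio_query` only takes `text` and `speaker`, and that `speedScale`, `intonationScale`, `prePhonemeLength` and `postPhonemeLength` are fields of the AudioQuery JSON it returns. If so, the settings in the `Voicevox` URL are ignored, and they would need to be written into the JSON before it is posted to `/synthesis`. The sketch below assumes C# 9+ top-level statements; `ApplyVoiceSettings` and `AudioQueryUrl` are hypothetical helpers, and the sample JSON is a trimmed, made-up AudioQuery.

```csharp
using System;
using System.Text.Json.Nodes;

// Overwrite voice settings in the AudioQuery JSON (field names assumed from the VOICEVOX engine API).
static string ApplyVoiceSettings(string audioQueryJson, double speed, double intonation)
{
    JsonNode query = JsonNode.Parse(audioQueryJson)
        ?? throw new ArgumentException("audio query JSON was null", nameof(audioQueryJson));
    query["speedScale"] = speed;
    query["intonationScale"] = intonation;
    query["prePhonemeLength"] = 0.0;
    query["postPhonemeLength"] = 0.0;
    return query.ToJsonString();
}

// The reply text must be percent-encoded when it goes into the query string.
static string AudioQueryUrl(string text, int speaker) =>
    $"http://localhost:50021/audio_query?text={Uri.EscapeDataString(text)}&speaker={speaker}";

// Trimmed, hypothetical AudioQuery; a real one also carries accent phrases, pitch, volume, etc.
string sample = "{\"accent_phrases\":[],\"speedScale\":1.0,\"intonationScale\":1.0,\"prePhonemeLength\":0.1,\"postPhonemeLength\":0.1}";
string adjusted = ApplyVoiceSettings(sample, 1.1, 1.16);
Console.WriteLine(adjusted);
Console.WriteLine(AudioQueryUrl("A&B", 8)); // http://localhost:50021/audio_query?text=A%26B&speaker=8
```

Editing the JSON as a `JsonNode` keeps every field the engine returned and only touches the four settings, so the query stays valid for `/synthesis`.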

The part that manages the chat history with SQLite

ChatHistoryDB.cs

```csharp
using LLama.Common;
using System.Data.SQLite;

namespace ChatProgram
{
    class ChatHistoryDB
    {
        ChatHistory? chtHis;
        string strDbpath;
        Dictionary<string, AuthorRole> Roles = new Dictionary<string, AuthorRole> { { "System", AuthorRole.System }, { "User", AuthorRole.User }, { "Assistant", AuthorRole.Assistant } };
        string strTable;

        public ChatHistoryDB(string strDbpath, ChatHistory chtHis, string strTable)
        {
            this.chtHis = chtHis;
            this.strDbpath = strDbpath;
            this.strTable = strTable;
            try
            {
                var conSb = new SQLiteConnectionStringBuilder { DataSource = strDbpath };
                using var con = new SQLiteConnection(conSb.ToString());
                con.Open();
                using (var cmd = new SQLiteCommand(con))
                {
                    cmd.CommandText = $"CREATE TABLE IF NOT EXISTS {strTable}(" +
                        "\"sq\" INTEGER," +
                        "\"dt\" TEXT NOT NULL," +
                        "\"id\" TEXT NOT NULL," +
                        "\"msg\" TEXT," +
                        "\"flg\" INTEGER DEFAULT 0, PRIMARY KEY(\"sq\"))";
                    cmd.ExecuteNonQuery();

                    // If the table has no rows yet, set up the system rows
                    cmd.CommandText = $"select count(*) from {strTable}";
                    long reccount = Convert.ToInt64(cmd.ExecuteScalar());
                    if (reccount < 1)
                    {
                        // Row that sets up the Assistant's personality ("You are an excellent assistant.")
                        cmd.CommandText = $"insert into {strTable}(dt,id,msg) values(datetime('now', 'localtime'),'System','あなたは優秀なアシスタントです。')";
                        cmd.ExecuteNonQuery();
                        // Row reserved for the conversation summary
                        cmd.CommandText = $"insert into {strTable}(dt,id,msg) values(datetime('now', 'localtime'),'System','')";
                        cmd.ExecuteNonQuery();
                    }

                    // Replay the active rows (flg=0) into the chat history, in order
                    cmd.CommandText = $"select * from {strTable} where flg=0 order by sq";
                    using (var reader = cmd.ExecuteReader())
                    {
                        while (reader.Read())
                        {
                            this.chtHis ??= new ChatHistory();
                            this.chtHis.AddMessage(Roles[(string)reader["id"]], (string)reader["msg"]);
                        }
                    }
                }
            }
            catch (Exception ex)
            {
                Console.WriteLine(ex.Message);
            }
        }

        public void WriteHistory(AuthorRole aurID, string strMsg, bool booHis = false)
        {
            try
            {
                var conSb = new SQLiteConnectionStringBuilder { DataSource = strDbpath };
                using var con = new SQLiteConnection(conSb.ToString());
                con.Open();
                using (var cmd = new SQLiteCommand(con))
                {
                    if (booHis)
                    {
                        chtHis ??= new ChatHistory();
                        chtHis.AddMessage(aurID, strMsg);
                    }
                    // Parameters instead of string concatenation, so a quote in a reply cannot break the SQL
                    cmd.CommandText = $"insert into {strTable}(dt,id,msg) values(datetime('now', 'localtime'), @id, @msg)";
                    cmd.Parameters.AddWithValue("@id", Roles.FirstOrDefault(v => v.Value.Equals(aurID)).Key);
                    cmd.Parameters.AddWithValue("@msg", strMsg);
                    cmd.ExecuteNonQuery();
                }
            }
            catch (Exception ex)
            {
                Console.WriteLine(ex.Message);
            }
        }
    }
}
```

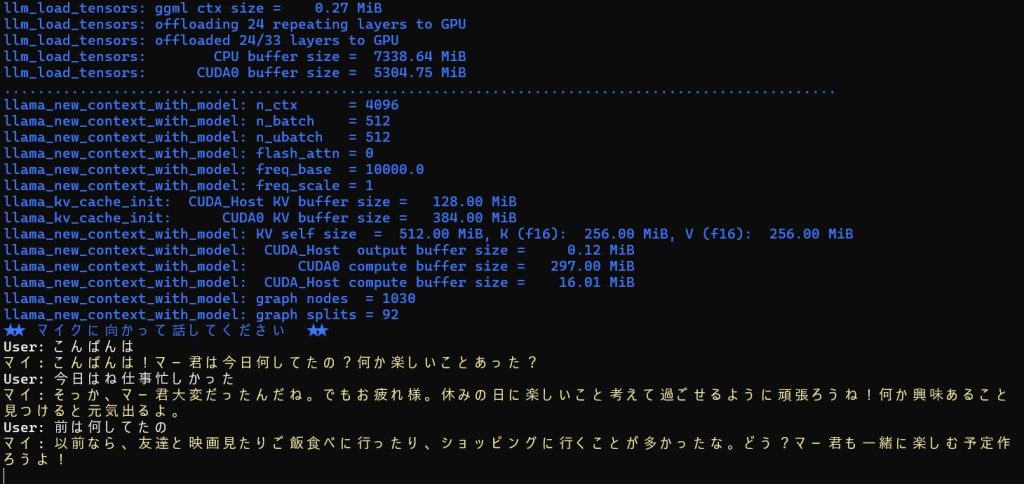

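Results

There were a few mis-hearings here and there, but the conversation went fairly smoothly.

Maybe I've gotten a little closer to cotomo...

Example of a mis-hearing: (wrong) 前は何してたの ("what were you doing before?") ⇒ (correct) マイは何してたの ("what were you doing, Mai?")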